What role do metadata play in the semantic web? The semantic web aims at structuring the contents of the web in a way that makes it possible for machines to find and combine information. At present, there are two approaches to this goal, one bottom-up and one top-down. In short, the bottom-up approach depends on content providers adding metadata to their web pages, while in the top-down approach a third party crawls the web, or a selection of sites, and automatically generates a set of metadata for certain pages.

Today, a number of semantic web applications give a glimpse of what can be done with highly structured data. It is interesting to note that all examples come from projects embracing the top-down approach, not from the bottom-up approach, which asks for systematic assignment of metadata. I’d like to take a closer look at Freebase Parallax. The power of Parallax is that it aggregates information from different sources and makes it visible on one screen, in parallel (hence the name, I assume) instead of as the usual sequential succession of sources. The data Parallax uses come mainly from Wikipedia, so don’t expect any groundbreaking new facts. But it’s still quite an awesome application, at least when you stick to the examples used in the demo video. (When experimenting yourself, it is rather difficult to find good examples, but there are a few more on Infobib (in German).)

The first example from the demo video is a search for Abraham Lincoln’s children and shows how Parallax can arrange all four entries on one page instead of the user having to navigate to each single page.

Abraham Lincoln's Children on Parallax

In the next step, the narrator of the video expands his search to all children of all American presidents, showing Robert Todd Lincoln alongside the children of George Bush, Sr. and Jr., and then goes on to find all schools that American presidents’ children attended.

Lincoln's son alongside the Bushes' children

What has happened is that the Wikipedia entry on Abraham Lincoln was stripped of its context and reduced to two relationships: a) Abraham Lincoln was a president of the US, and b) Abraham Lincoln had children. When the same is done for the rest of Wikipedia, all entries with the attributes «American president» and «has children» can be combined. As in a database, you can match all X which have an attribute Y (e.g. a school) without even having to give this attribute Y a value (e.g. the name of a certain school).
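To make the database analogy concrete, here is a minimal sketch in Python. It is not how Parallax or Freebase actually work internally; the predicate names (`held_office`, `has_child`, `attended`) and the helper functions are made up for illustration, and the handful of facts is typed in by hand.

```python
# Hand-written sample facts as (subject, predicate, object) triples.
# Predicate names are hypothetical, not Freebase's real schema.
TRIPLES = [
    ("Abraham Lincoln", "held_office", "US president"),
    ("Abraham Lincoln", "has_child", "Robert Todd Lincoln"),
    ("George H. W. Bush", "held_office", "US president"),
    ("George H. W. Bush", "has_child", "George W. Bush"),
    ("George W. Bush", "held_office", "US president"),
    ("George W. Bush", "has_child", "Jenna Bush Hager"),
    ("Robert Todd Lincoln", "attended", "Harvard College"),
    ("George W. Bush", "attended", "Yale University"),
]


def objects(subject, predicate):
    """All values Y such that (subject, predicate, Y) is a known fact."""
    return [o for s, p, o in TRIPLES if s == subject and p == predicate]


def subjects_with(predicate, obj=None):
    """All X that have the attribute `predicate`.

    With obj=None, any value matches: we ask for "X which have a Y"
    without naming a particular Y.
    """
    return sorted({s for s, p, o in TRIPLES
                   if p == predicate and (obj is None or o == obj)})


# Step 1: everything reduced to «American president».
presidents = subjects_with("held_office", "US president")

# Step 2: follow «has children» from every president at once.
children = sorted({c for p in presidents for c in objects(p, "has_child")})

# Step 3: follow «attended» from every child at once.
schools = sorted({s for c in children for s in objects(c, "attended")})

# The open question: who attended *some* school, whichever it is?
has_school = subjects_with("attended")
```

Each step follows one relationship from a whole set of entries instead of from a single page, which is what the video shows when it jumps from Lincoln's children to all presidents' children and then to their schools.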

The example shows that de-contextualization (the reduction of Robert Todd Lincoln to «child of US president») has great potential for creating new associations of information. A number of Firefox extensions make use of this and combine information about books, films etc. with people who have visited the corresponding web sites (Glue) or link terms to other sources according to their classification as person, company, country etc. (Gnosis). The re-contextualization, however, is tricky. If too much context is suppressed, the results become ridiculous, as with the link from Abraham Lincoln (correctly identified as a person) to LinkedIn.

Gnosis' links for Abraham Lincoln

Also, the ability of machines to categorize information still seems to be very limited. Glue (called «BlueOrganizer» when I took the screenshot), for instance, can only recognize books on websites such as Amazon or Barnes & Noble, but not on the Library of Congress’ site. And the mere insight that a page belongs to a certain category strikes the human user as rather silly.

BlueOrganizer recognizes that you're looking at a book!

This shortcoming cannot be attributed to the absence of metadata. Zotero (mentioned earlier on this blog), for instance, manages to identify books on many more sites, library sites as well as on-line bookshops. It just shows how much effort is necessary to recognize structure on the web as such. This is exactly what the top-down approach achieves: It identifies concrete questions (e.g. who else read this book?) as well as sources which might be relevant for answering them (e.g. sites which provide links from a book to people who have read it) and then creates new relationships between these data. This is a much more selective approach than the bottom-up one, and I believe it is more promising. The bottom-up approach either doesn’t go beyond today’s databases or aims at the maximum number of options for future relationships. It is too unspecific to be attractive, and there are too many uncertainties about its usefulness. This, I believe, is the main reason why it isn’t being adopted. For reasons of semantics, I also doubt that it will be possible in the near future to achieve useful results by automatically mapping data from heterogeneous sources via generic ontologies (see the above example of Abraham Lincoln in LinkedIn).

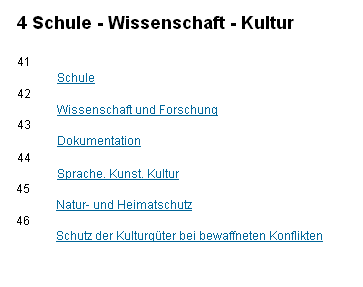

Maybe the bottom-up approach should be viewed without rigid technical requirements, but more openly, in the sense of carefully produced content which

- embeds information in its context (e.g. origin, use, related information) and/or

- structures content within a certain scope by using (more or less) standardized elements.

Standardized display of a city on Wikipedia

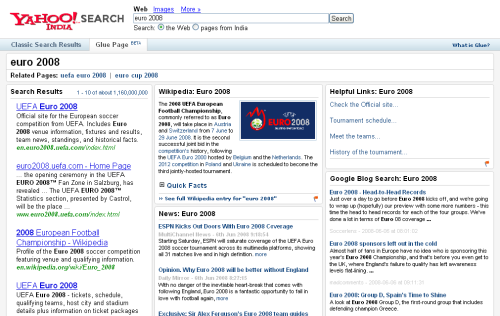

This leads me back to my initial question: Are metadata good for findability? The semantic web, at least at present, is another example showing that adding descriptors is neither popular with content producers nor, it seems, considered crucial for automated processing. But metadata aren’t restricted to descriptors which are added to content; the features mentioned above are pure metadata and absolutely essential for creating structured search. And structured search, or variations of it like faceted navigation or the semantic web applications mentioned above, has a great advantage over pattern-matching search engines. Instead of only being able to look for X, it is possible to look for something you do not exactly know except that it should have the characteristic Y.
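The difference between the two kinds of search can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the four records, the field names (`type`, `country`) and both functions are invented for the example and do not describe any particular search engine.

```python
# Hand-written sample records: free text plus a few standardized fields,
# roughly in the spirit of a Wikipedia infobox. Field names are hypothetical.
DOCS = [
    {"title": "Bern", "text": "Federal city on the river Aare.",
     "meta": {"type": "city", "country": "Switzerland"}},
    {"title": "Zurich", "text": "Largest Swiss city, on a lake.",
     "meta": {"type": "city", "country": "Switzerland"}},
    {"title": "Lyon", "text": "City at the confluence of Rhône and Saône.",
     "meta": {"type": "city", "country": "France"}},
    {"title": "Aare", "text": "A river in Switzerland.",
     "meta": {"type": "river", "country": "Switzerland"}},
]


def pattern_search(term):
    """Pattern matching: find records whose text contains the term."""
    return [d["title"] for d in DOCS if term.lower() in d["text"].lower()]


def facet_search(**facets):
    """Structured search: find records whose metadata match every facet."""
    return [d["title"] for d in DOCS
            if all(d["meta"].get(key) == value for key, value in facets.items())]


# Looking for X: only the record that happens to contain the word is found.
by_pattern = pattern_search("Switzerland")

# Looking for "something with characteristic Y": both Swiss cities are found,
# although neither text mentions the word "Switzerland".
by_facet = facet_search(type="city", country="Switzerland")
```

The pattern search returns the river, because its text contains the word, and misses both cities. The facet search finds the cities because it relies on the structured fields rather than on the wording of the text.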

Carefully structured, metadata-rich information may not be crucial for search engines which rely on pattern-matching. But it opens new perspectives for exploring related content, for finding answers without exactly knowing the question.